Hi everyone, the first Nymja.ai deliverable is ready!

Quick Demo

You can find instructions on how to install and run the project on my GitHub.

In this post I’m going to share the project’s structure, its main features, and my plans for the next 3 months.

Also, anyone interested can contribute by:

- filling out the forms I’ve created to collect feedback on the UX and suggestions from the community

- participating in the Nymja.ai Focus Group on Telegram

What is Nymja.ai v1?

Nymja.ai v1 is the first deliverable of a Nym SDK grant. The project aims to bring together multiple open-source LLMs for the convenience of the community. It started in Q4 2024 and is due to end in Q2 2025, when I will deliver a local v2 application.

Ideally, this grant can grow into a commercial product, served remotely, with traffic privacy provided by a connection through the mixnet.

Right from the start, my goal was to scale this application to work remotely, which is why I containerized it with Docker.

At the moment, only one model runs behind it: Llama 3.2 1B, one of the lightest models currently available.

In the original project proposal, I suggested using Mistral 7B. However, my hardware limitations didn’t allow me to run this model.

Instead, I opted for Ollama’s Docker image to manage the downloaded models. Through it, I will implement a range of lightweight models (1B, 1.5B) for the second deliverable. Unfortunately, Mistral will be left out, because it’s too heavy for ordinary PCs.
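Since the models sit behind Ollama, the backend can reach them over Ollama’s HTTP API. Below is a minimal, stdlib-only sketch of a non-streaming call to the `/api/generate` endpoint, assuming Ollama listens on its default port 11434 on localhost. The helper names `build_generate_request` and `generate` are hypothetical and not taken from the Nymja.ai code; `num_ctx` is the Ollama option that sets the context window in tokens.

```python
import json
import urllib.request

# Assumption: Ollama's default port on the host. Inside docker-compose this
# would typically be the Ollama service name instead of localhost.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(prompt: str, model: str = "llama3.2:1b",
                           num_ctx: int = 2048) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,                  # return one JSON object, not a stream
        "options": {"num_ctx": num_ctx},  # context window size in tokens
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, **kwargs) -> str:
    """Send the request and return the model's reply text."""
    with urllib.request.urlopen(build_generate_request(prompt, **kwargs)) as resp:
        return json.loads(resp.read())["response"]
```

For example, `generate("Hello!", model="llama3.2:1b")` would return the reply as a plain string once that model has been pulled into Ollama. Switching models is then just a matter of passing a different model tag.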

How is this project structured?

The project uses Python Django for the backend and Next.js for the frontend. The database is PostgreSQL, and the models are managed by Ollama.
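With Django, PostgreSQL and Ollama each running in their own container, the backend typically reads its connection details from environment variables rather than hardcoding localhost. Here is a minimal sketch of Django’s `DATABASES` setting built that way. The variable names (`POSTGRES_DB`, `POSTGRES_USER`, `POSTGRES_PASSWORD`, `POSTGRES_HOST`, `POSTGRES_PORT`), the `database_settings` helper and the `db` host default are assumptions about a typical docker-compose setup, not the project’s actual configuration.

```python
import os


def database_settings(env=os.environ) -> dict:
    """Build Django's DATABASES setting from environment variables.

    Hypothetical names and defaults: in docker-compose, HOST is usually the
    PostgreSQL service name, which the containers resolve on their network.
    """
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "NAME": env.get("POSTGRES_DB", "nymja"),
            "USER": env.get("POSTGRES_USER", "postgres"),
            "PASSWORD": env.get("POSTGRES_PASSWORD", ""),
            "HOST": env.get("POSTGRES_HOST", "db"),
            "PORT": env.get("POSTGRES_PORT", "5432"),
        }
    }


# In settings.py this would be used as: DATABASES = database_settings()
```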

Both your data and the LLM are processed locally, so no third-party API is called. In addition, all conversation content is encrypted using Fernet.
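In Python, Fernet is normally used through `cryptography.fernet.Fernet` (`Fernet.generate_key()`, `encrypt()`, `decrypt()`). To show what an encrypted message actually looks like, here is a stdlib-only sketch that checks a token against the layout in the Fernet spec (version byte `0x80`, 64-bit timestamp, 128-bit IV, AES-CBC ciphertext, HMAC-SHA256) and verifies its HMAC without decrypting it, since AES is not in Python’s standard library. It assumes conversation content is stored as raw Fernet tokens; `inspect_fernet_token` is a hypothetical helper.

```python
import base64
import hashlib
import hmac
import struct
from datetime import datetime, timezone

FERNET_VERSION = 0x80      # version byte defined by the Fernet spec
HMAC_LEN = 32              # HMAC-SHA256 digest size
HEADER_LEN = 1 + 8 + 16    # version + 64-bit timestamp + 128-bit IV
MIN_CIPHERTEXT = 16        # AES-CBC with padding yields at least one block


def inspect_fernet_token(token: str, key: str) -> dict:
    """Check a Fernet token's structure and HMAC, without decrypting it."""
    raw = base64.urlsafe_b64decode(token)
    key_bytes = base64.urlsafe_b64decode(key)
    if len(key_bytes) != 32:
        raise ValueError("Fernet keys are 32 bytes once base64-decoded")
    signing_key = key_bytes[:16]  # first half signs, second half encrypts

    if len(raw) < HEADER_LEN + MIN_CIPHERTEXT + HMAC_LEN or raw[0] != FERNET_VERSION:
        raise ValueError("not a Fernet token")

    # The HMAC covers everything before it: version, timestamp, IV, ciphertext.
    body, tag = raw[:-HMAC_LEN], raw[-HMAC_LEN:]
    expected = hmac.new(signing_key, body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("HMAC mismatch: wrong key or tampered token")

    (timestamp,) = struct.unpack(">Q", raw[1:9])  # big-endian seconds
    return {
        "created_at": datetime.fromtimestamp(timestamp, tz=timezone.utc),
        "ciphertext_bytes": len(body) - HEADER_LEN,
    }
```

Because of the `0x80` version byte followed by a timestamp whose high bytes are zero, Fernet tokens start with `gAAAAA` once base64-encoded, which makes encrypted columns easy to spot when browsing the database.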

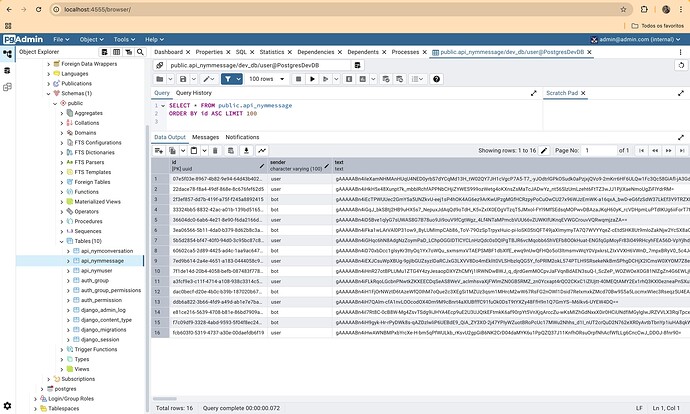

You can manage the database via pgAdmin. There’s no need to download it: it runs as a Docker container at localhost:4555. Just open that address in your browser after building.

The default login is “admin@admin.com” with the password “admin”. You can change this configuration in docker-compose and in servers.json (root/backend/pgadmin-config/servers.json). Within pgAdmin, the database password is “password”.
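After the containers are built and started, a plain TCP check is a quick way to confirm that pgAdmin is actually listening before opening the browser. A small stdlib sketch; the helper name `is_listening` is hypothetical, and the PostgreSQL line assumes port 5432 is published to the host, which depends on the project’s docker-compose file.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or host unreachable
        return False


if __name__ == "__main__":
    # 4555 is pgAdmin's port from the post; 5432 is PostgreSQL's default
    # (only reachable if docker-compose publishes it to the host).
    for name, port in [("pgAdmin", 4555), ("PostgreSQL", 5432)]:
        state = "up" if is_listening("localhost", port) else "not reachable"
        print(f"{name} on localhost:{port}: {state}")
```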

A snippet of how the data is stored:

Features

As this is the first version, it has these basic features:

- user registration

- login/logout

- creating and deleting conversations

- encryption of all chat content

What are the next steps?

I want to take advantage of this moment to get feedback from the community and understand which features are most desired. For my part, in the second deliverable I intend to:

- add some lightweight AI models so that users can choose between them (possibly Gemma 3 1B, DeepSeek 1.5B, DeepSeek Coder 1.3B, Qwen2 0.5B / 1B and/or StarCoder 1B).

- add context customization, letting users decide how many context tokens are supported (keep in mind that this will run on your own machine).
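To give a feel for why the context size matters on a personal machine: the KV cache stores one key and one value vector per layer, per key/value head, per token, so it grows linearly with the number of context tokens. A back-of-the-envelope sketch, assuming Llama 3.2 1B’s attention shape (16 layers, 8 key/value heads, head dimension 64) and a 16-bit cache. It ignores the model weights and other runtime buffers, and Ollama may allocate memory differently (for example with a quantized cache).

```python
def kv_cache_bytes(num_ctx: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Rough KV-cache size: keys + values for every layer, KV head and token."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * num_ctx


# Assumed Llama 3.2 1B attention shape, fp16 (2-byte) cache entries.
for ctx in (2048, 4096, 8192, 32768):
    mib = kv_cache_bytes(ctx, n_layers=16, n_kv_heads=8, head_dim=64) / 2**20
    print(f"num_ctx={ctx:>6}: ~{mib:.0f} MiB of KV cache")
```

With these numbers each token costs 32 KiB, so going from 4,096 to 32,768 context tokens takes the cache from about 128 MiB to about 1 GiB, on top of the model itself.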

I’ll also start testing the app as a remote service during the last 3 months of the grant, using a VPS I manage with 8 GB of RAM, 8 vCPUs and 10 Gbps of bandwidth.

Final comments

I am grateful for the trust placed in me and confident that we can develop a reference project in AI, open source, and privacy, amplifying Nym’s message and the fight for privacy by default. All suggestions are welcome, so feel free to join the Telegram group and/or fill out the forms (it really helps me!).

Lastly, I’d also like to thank my colleagues Victor Vasconcellos and EduardaDT, squad friends who helped me during the early stage of the app’s development.